The Complete History of Graphic Cards: Evolution and Future Trends in 2024

When you purchase through links on our website, we may earn an affiliate commission.

Hey there! Ever wondered how we got from those pixelated, blocky graphics in early computer games to the jaw-dropping, hyper-realistic visuals we see today? It’s all thanks to the incredible evolution of graphic cards. The history of graphic cards is packed with groundbreaking innovations, fierce industry rivalries, and a few hiccups along the way. In fact, did you know that IBM’s first PC display adapters launched over 40 years ago, back in 1981? Yeah, it’s been quite the journey. So, let’s dive into the wild ride that took us from simple display adapters to the powerhouse GPUs that can handle everything from gaming to AI. Buckle up. It’s gonna be a trip!

The Birth of Graphic Cards: Early Innovations and Milestones

You know, it’s funny to think about how far we’ve come when it comes to graphic cards. Back in the day, when I first started tinkering with computers, graphic cards were pretty much an afterthought. I remember my first computer, one of those old beige boxes, came with a built-in graphics solution that could barely handle solitaire, let alone anything more demanding. But man, those were simpler times. No fancy GPUs or 3D rendering, just the basics.

The very first graphic cards, the ones that set the stage for all the tech we’ve got today, were really just simple display adapters: they got an image onto the monitor, but they didn’t accelerate anything. They were like the training wheels for the high-powered GPUs we use now. IBM’s Monochrome Display Adapter (MDA), which hit the market in 1981, was one of the pioneers. It didn’t support color or even pixel graphics, just crisp text, but it got the job done for early business applications. I remember seeing one of those in action and thinking, “Wow, this is the future!” Funny how that feels like ancient history now.

However, the real game-changer for color arrived at the same time: IBM’s Color Graphics Adapter (CGA), also released in 1981, was a huge leap forward. Sure, in its 320×200 graphics mode it could only display four colors at once (yep, just four), but it was revolutionary for its time. I still remember the first time I saw a game running on CGA. It was a simple platformer, nothing like the games we have now, but back then, those four colors were pure magic. I even tried to hack the settings to squeeze out an extra color or two, but no luck there. It was either stick with the palette or deal with monochrome. But hey, it was progress!

As the 80s rolled on, the competition heated up. Companies like Hercules came onto the scene with the Hercules Graphics Card in 1982 (and later the Graphics Card Plus), which paired sharp monochrome text with high-resolution 720×348 graphics for business users. I remember hearing about it and being a bit jealous because, of course, my setup wasn’t quite that advanced. But then again, I was more into gaming than spreadsheets, so it wasn’t a total loss.

The late 80s and early 90s saw even more significant milestones. Enter VGA (Video Graphics Array) in 1987, and suddenly, we were in the big leagues. VGA could display 256 colors at once in its 320×200 mode, which, at the time, felt like an explosion of possibilities. I’ll never forget the first time I upgraded to a VGA card. Suddenly, the games I’d been playing for years felt brand new. It was like seeing them through a whole new lens. But with that came the realization that I had no idea what I was doing when it came to installing hardware. I think I spent more time troubleshooting than actually playing, but hey, you live and learn.
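If you’ve ever wondered why those early palettes were so small, the answer is mostly memory. Every pixel needs enough bits to pick one of the available colors (2 bits for 4 colors, 8 bits for 256), so the color count directly sets how much video memory a full screen takes. Here’s a quick Python sketch of that arithmetic. The helper names are just made up for illustration, and the two modes compared are CGA’s 320×200 four-color mode and VGA’s 320×200 256-color mode.

```python
import math

def bits_per_pixel(colors: int) -> int:
    """Smallest number of bits that can index `colors` distinct colors."""
    return math.ceil(math.log2(colors))

def framebuffer_bytes(width: int, height: int, colors: int) -> int:
    """Bytes needed to store one full screen at the given color count."""
    return width * height * bits_per_pixel(colors) // 8

# CGA's 320x200 four-color mode vs. VGA's 320x200 256-color mode
cga = framebuffer_bytes(320, 200, 4)    # 2 bits per pixel
vga = framebuffer_bytes(320, 200, 256)  # 8 bits per pixel
print(f"CGA 320x200, 4 colors:   {cga:,} bytes")
print(f"VGA 320x200, 256 colors: {vga:,} bytes")
```

That works out to 16,000 bytes for CGA and 64,000 bytes for VGA. CGA shipped with 16 KB of video memory, so its four-color screen only just fit; VGA’s 256-color screen needs four times as much, which its 256 KB of memory handled comfortably.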

Looking back, it’s amazing to see how these early innovations laid the groundwork for everything that followed. These were the building blocks, the first steps toward creating the complex, high-performance graphic cards that power today’s tech. And while I might have struggled with installations and upgrades back then, it was all worth it for those moments when the screen finally flickered to life, showing off those crisp, colorful graphics. Good times.

The Rise of 3D Graphics: Game-Changing Developments in the 1990s

Ah, the 90s were a golden era for so many things, including the rise of 3D graphics. If you were into gaming or computers back then, you’ll probably remember the buzz around 3D acceleration. It was like stepping into a whole new world. I was still pretty green with technology at the time, but even I could see that something big was happening. The jump from 2D to 3D wasn’t just a step. It was a full-on leap.

I think the first time I really grasped what 3D acceleration meant was when I saw someone playing Quake. Now, that was a game-changer. Until then, most games were basically flat. Even Doom, which felt 3D, built its levels from 2D maps and drew its monsters as flat sprites. Quake used true 3D environments and polygon models, and GLQuake, the version built for 3D accelerator cards, had this incredible sense of space and movement that just blew my mind. Of course, the first time I tried to get my computer to run it, I crashed the whole system. Turns out, you need a 3D card that’s actually compatible with your machine. Who knew, right?

Companies like NVIDIA and ATI (which AMD acquired in 2006) were leading the charge, and the rivalry between them was something else. It was like watching a heavyweight boxing match, with each company trying to outdo the other with faster, more powerful cards. I remember saving up for months to buy an NVIDIA RIVA 128, which was one of the first affordable 3D accelerators. That card changed everything for me. Suddenly, I could play all the latest games, and they looked amazing. But the installation? Let’s just say it didn’t go smoothly. I had no idea what I was doing, and I spent hours on the phone with tech support. But when I finally got it working, it was like striking gold.

Texture mapping, filtering, and more convincing lighting started coming into play around this time, too (fully programmable shaders would follow a few years later, with cards like NVIDIA’s GeForce 3 in 2001). These technologies allowed for more realistic graphics, adding layers of detail that made games more immersive than ever. I remember being totally captivated by the way light and shadows played across the screen in games like Half-Life and Unreal. It wasn’t just about playing a game. It was about experiencing it.

Of course, with all this new tech came a whole new set of problems. My system started to lag, crash, and generally make life difficult whenever I pushed it too hard. I didn’t know it then, but I was running into issues with bottlenecks, where the CPU couldn’t keep up with the GPU. It took me a while to figure that one out, longer than I’d like to admit. But once I did, and after a lot of tweaking and upgrading, I finally had a system that could handle everything I threw at it. Well, almost everything.
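Here’s a back-of-the-napkin way to think about that kind of bottleneck. In a simplified model where the CPU prepares the next frame while the GPU draws the current one, each frame takes as long as the slower of the two, so frame rate ≈ 1000 ÷ max(CPU ms, GPU ms). The Python sketch below is just that simplified model; the function names and frame times are made up for illustration, not real benchmarks, and real games are messier (drivers, synchronization, memory transfers).

```python
def estimated_fps(cpu_ms: float, gpu_ms: float) -> float:
    """Simplified pipeline model: the slower stage sets the pace.

    Assumes the CPU and GPU work on consecutive frames in parallel,
    so each frame effectively takes max(cpu_ms, gpu_ms).
    """
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms

def bottleneck(cpu_ms: float, gpu_ms: float) -> str:
    """Report which component is holding the frame rate back."""
    if cpu_ms > gpu_ms:
        return "CPU"
    if gpu_ms > cpu_ms:
        return "GPU"
    return "neither (balanced)"

# Hypothetical frame times in milliseconds (illustrative only)
scenarios = {
    "old CPU + new GPU": (40.0, 10.0),
    "new CPU + new GPU": (12.0, 10.0),
}
for name, (cpu, gpu) in scenarios.items():
    print(f"{name}: {estimated_fps(cpu, gpu):.0f} fps, limited by {bottleneck(cpu, gpu)}")
```

In the first scenario, an even faster GPU wouldn’t help at all: the CPU’s 40 ms per frame caps things at 25 fps no matter what card you plug in.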

The 90s were a time of rapid change and incredible innovation, and the rise of 3D graphics was a huge part of that. It was a wild ride, full of ups and downs, but every crash and every frustrating error message was worth it for the thrill of seeing those first 3D-rendered worlds come to life. Looking back, it’s hard not to feel a little nostalgic for those days, even if they did come with their fair share of headaches.

The 2000s: The Era of Competition and Technological Advancements

Ah, the 2000s, what a time to be alive, especially if you were into tech. The GPU wars between NVIDIA and ATI were in full swing, and as someone who was always trying to keep up with the latest and greatest, it felt like I was constantly upgrading my rig just to stay in the game. But honestly, that’s part of what made it so exciting. Every year, it seemed like there was a new card that could do things you didn’t even know were possible. And, of course, I was determined to get my hands on the best one.

I remember when NVIDIA launched the GeForce 256 at the tail end of 1999 and marketed it as the world’s first GPU. Now, this was a big deal. Before this, most people, myself included, didn’t even know what a GPU was, let alone why it mattered. The GeForce 256 introduced us to the concept of transform and lighting (T&L) on the GPU: instead of the CPU working out where every vertex of a 3D model lands on screen and how it’s lit, the graphics card did it in hardware, which took a huge load off the CPU. It meant games could run smoother, with better graphics, and without tanking your system’s performance. I was pretty skeptical at first. After all, how much difference could one card make? But after I finally upgraded, I was hooked. Games that had been choppy or slow before suddenly ran like a dream. Of course, that “dream” quickly turned into a nightmare when my power supply couldn’t handle the new card, and I ended up frying half my system. Yeah, not my finest moment.

Then there was the Radeon 9700 Pro, launched by ATI in 2002. This card was a beast, and it marked ATI’s comeback in a big way. It was the first GPU to support DirectX 9, and it completely changed the game for PC graphics. I remember my friend, who was a die-hard NVIDIA fan, begrudgingly admitting that the Radeon 9700 Pro was the real deal. I ended up getting one, and let me tell you, it was worth every penny. Suddenly, I was able to play games at higher resolutions with all the settings cranked up, and it looked amazing. But there was a catch: my CPU couldn’t keep up, so while the graphics were stunning, the framerate was… well, less than ideal. That led to a whole new round of upgrades and a lot of swearing at my bank account.

One of the biggest advancements in the 2000s was the evolution of graphics APIs, like DirectX and OpenGL. These are the standard interfaces developers use to talk to the graphics hardware without writing code for every individual card, and each new version unlocked the features behind the stunning visuals we’ve come to expect from modern games. I didn’t really understand how APIs worked back then. I just knew that when a new version of DirectX came out, it was time to start saving up for the next big GPU release. And, of course, that meant more time spent tinkering with settings, trying to squeeze every last drop of performance out of my system. I think I spent more time tweaking and optimizing than I did actually playing games, but that was half the fun.

Another major development during this era was the introduction of GPGPU (General-Purpose computing on Graphics Processing Units). Now, this was a concept that really blew my mind. The idea that GPUs could be used for more than just graphics, that they could handle complex calculations and help with tasks like physics simulations or even scientific research, was pretty wild. I didn’t get into GPGPU myself, but I remember reading about it and thinking, “This is the future.” And sure enough, it’s become a huge part of how we use GPUs today, from AI to cryptocurrency mining.

The 2000s were a time of rapid advancement and fierce competition in the GPU market, and while it wasn’t always easy to keep up, it was definitely worth the effort. I still look back on those days with a mix of nostalgia and relief: nostalgia for the excitement of trying out the latest hardware and relief that I don’t have to worry about upgrading every few months anymore. Well, at least not as often.

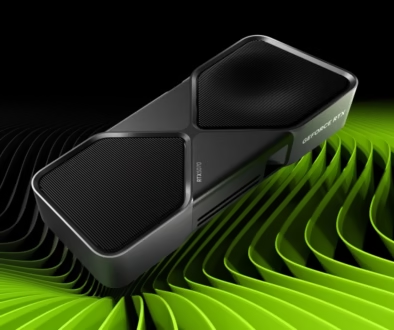

The Modern Era: GPUs Beyond Gaming

So here we are in the modern era, where GPUs have gone way beyond just gaming. Honestly, if you’d told me back in the 90s that graphic cards would one day be used for everything from AI research to cryptocurrency mining, I probably would’ve laughed. But that’s where we are now, and it’s been a wild ride getting here.

One of the biggest shifts in recent years has been the use of GPUs for AI and machine learning. I first heard about this a few years ago, and it kind of blew my mind. I mean, I knew GPUs were powerful, but I’d always thought of them as something you used for gaming or maybe video editing, never for something as complex as AI. But it turns out that GPUs are incredibly well-suited for the kinds of calculations needed in machine learning. It’s all about parallel processing, where the GPU can handle thousands of calculations at once. I’ve got a buddy who works in AI research, and he’s always going on about how his work would be impossible without the power of modern GPUs. It’s crazy to think about how far we’ve come. These cards aren’t just for fun anymore; they’re changing the world.
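Here’s a toy illustration of why this works so well. Much of machine learning boils down to matrix multiplication, and every cell of a matrix product can be computed on its own, without waiting for any other cell. The Python sketch below runs on an ordinary CPU and deliberately computes the cells in a shuffled order to show that the order doesn’t matter; a GPU exploits that same independence by handing thousands of cells to thousands of threads at once. The function names are hypothetical, and this is an illustration of the idea, not how real GPU libraries are written.

```python
import random

def output_element(a, b, i, j):
    """One output cell: dot product of row i of A with column j of B.

    It reads only A and B, never any other output cell, so every
    cell could be computed at the same time by a separate worker.
    """
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul_any_order(a, b, seed=0):
    """Multiply A by B, computing the output cells in a random order."""
    rows, cols = len(a), len(b[0])
    out = [[0] * cols for _ in range(rows)]
    tasks = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(tasks)  # no task depends on another
    for i, j in tasks:
        out[i][j] = output_element(a, b, i, j)
    return out

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_any_order(a, b))  # [[19, 22], [43, 50]]
```

Whatever shuffle you pick, the result is the same, and that independence is exactly what lets a GPU split the work across its cores.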

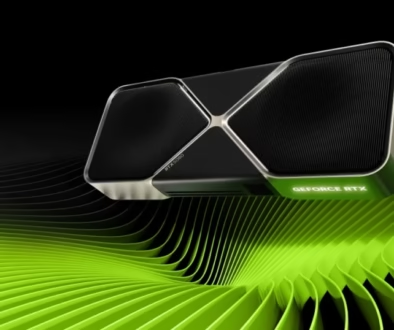

Another major development has been the rise of real-time ray tracing, a technique that simulates how light travels and bounces around a scene to create incredibly realistic lighting, reflections, and shadows in games and other visual media. Ray tracing had long been used for offline rendering, but consumer GPUs only became fast enough to do it in real time with NVIDIA’s RTX 20 series in 2018. Now, this is something I can definitely get behind. I remember the first time I saw a demo of ray tracing in action: it was a scene from some new game, and the way the light reflected off the water and bounced around the environment was just stunning. It was like nothing I’d ever seen before. Of course, the first time I tried to run a ray-traced game on my system, it nearly melted my GPU. Yeah, turns out you need some serious horsepower to handle ray tracing, and my setup just wasn’t up to the task. That was a tough lesson to learn, but it gave me a new appreciation for just how far GPU technology has come.

And then there’s cryptocurrency mining, which has been both a blessing and a curse for GPU enthusiasts like me. On the one hand, it’s fascinating to see GPUs crunching through the enormous number of hash calculations that mining requires. On the other hand, it’s driven up the price of graphic cards like you wouldn’t believe. I remember trying to buy a new GPU a few years ago, only to find that prices had doubled, or even tripled, because of the demand from miners. It was frustrating, to say the least. But at the same time, it’s a testament to just how versatile and powerful these cards have become. They’re not just for playing games anymore. They’re tools for all kinds of cutting-edge applications.

Of course, GPUs are still a big deal in the gaming world, too. But it’s been interesting to see how they’re being used in professional industries as well. I’ve got a friend who’s a video editor, and he swears by his high-end GPU for handling all the complex rendering and effects work he does. It’s not just video editing; graphic designers, 3D artists, and even scientists are all using GPUs to push the boundaries of what’s possible in their fields. It’s pretty cool to see how something that started as a way to improve gaming has evolved into a tool for serious professional work.

Looking back, it’s amazing to see how much GPUs have changed over the years. They’ve gone from being a niche product for gamers and tech enthusiasts to being a vital part of so many different industries. And while I’ll always have a soft spot for the gaming side of things, it’s exciting to think about all the other ways these powerful little cards are being used. The future is looking bright, and it’s all thanks to the incredible evolution of the humble graphic card.

The Future of Graphic Cards: What’s Next?

So, what’s next for graphic cards? Well, if there’s one thing I’ve learned over the years, it’s that you can never predict exactly where technology is going to go. But that doesn’t mean we can’t have a little fun speculating, right?

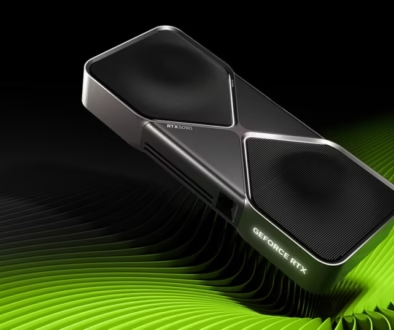

One of the big things on the horizon is the continued development of AI-driven GPUs. We’ve already seen how GPUs are being used in AI research, but this is just the beginning. I think we’re going to see a lot more integration of AI into the GPUs themselves, which could lead to some pretty incredible advancements. We’re already seeing early versions of this with AI upscaling like NVIDIA’s DLSS, which renders a game at a lower resolution and uses dedicated AI hardware on the card to scale it back up. Imagine a GPU that can optimize its own performance on the fly, adapting to the specific needs of whatever application you’re running. That’s the kind of thing we’re talking about. It’s a little scary, sure; there’s always that fear of machines getting too smart for their own good, but it’s also incredibly exciting. I can’t wait to see what these AI-powered GPUs will be capable of.

Another trend that’s been gaining traction is the push for more sustainable and energy-efficient GPUs. This is something that’s been on my mind for a while now, especially with all the talk about climate change and the environmental impact of technology. GPUs, especially the high-end ones, can be real power hogs, and that’s not great for the planet. But I’ve been seeing more and more companies starting to focus on reducing the energy consumption of their graphic cards, which is a step in the right direction. Newer manufacturing processes and chip designs are squeezing more performance out of every watt, and innovative cooling solutions, like liquid cooling and more efficient fan designs, help manage the heat these cards produce more quietly and efficiently. It’s not going to solve the problem overnight, but it’s encouraging to see the industry taking this seriously.

And, of course, we can’t talk about the future of graphic cards without mentioning the ongoing advancements in gaming technology. Ray tracing is still relatively new, and while it’s impressive, it’s also pretty demanding on hardware. But as GPUs continue to evolve, I think we’re going to see more games fully embracing ray tracing and other advanced rendering techniques. We’re also starting to see techniques like path tracing, once reserved for movie studios, running in real time, which could lead to some incredibly lifelike graphics in the near future. I’m not sure my wallet is ready for the kind of upgrades these advancements are going to require, but hey, that’s the price we pay for progress, right?

Another thing that’s worth keeping an eye on is the potential for GPUs to be used in entirely new ways. We’ve already seen how they’ve been repurposed for tasks like AI research and cryptocurrency mining, but who knows what else they could be capable of? GPUs are already being used in medical imaging and to crunch data from telescopes, and those uses are only likely to grow. It’s exciting to think about the possibilities. Who knows, maybe one day, the graphic card you’re using to play the latest game could also be helping to map out distant galaxies. That’s the kind of future I’d love to see.

So, yeah, the future of graphic cards is looking pretty bright. There are still plenty of challenges to overcome, especially when it comes to things like sustainability and accessibility, but the potential is there for some truly amazing advancements. As someone who’s been following the evolution of GPUs for a long time now, I can’t wait to see where things go from here. Whatever happens, one thing’s for sure: it’s going to be one heck of a ride.

Conclusion

So there you have it, the wild and wonderful history of graphic cards, from their humble beginnings to the cutting-edge tech we use today. It’s been quite a journey, full of twists and turns, and it’s not over yet. Whether you’re a gamer, a designer, or just a tech enthusiast, there’s no denying the impact that graphic cards have had on the world of computing. And as we look to the future, there’s no telling what new innovations are just around the corner. So, what about you? Do you have any fond (or not-so-fond) memories of your own GPU adventures? Share your stories in the comments. Let’s keep the conversation going!
